I’m not quite sure why, but once again I’m writing about test roles. I don’t know of another job in the world where discussions like these are common. On the other hand, I don’t know of a job in the world where people are so passionate about what they do (and don’t do) as part of their role. I’ll chalk it up to the continued growth of the role and see if I can convince myself to finish this and post it before I stop myself.

Here’s the short version for those already bored with the topic. Roles that testers play on teams vary. They vary a lot. You can’t compare them. That’s ok, and (IMO) part of the growth of the role. I find it disturbing and detrimental when testers not only assume that their version of testing is “the way”, or that some roles (or people in those roles) do not qualify as testing.

And now for the longer version… The recent test is dead meme (which interestingly, won’t die) brought to light (in semi-dramatic fashion) that in some situations, some traditional “test” roles don’t exist anymore. It wasn’t originally phrased that way, but if you looked under the covers, that’s all that was there. I’m still surprised that so many people got stuck on the three-word catch phrase and couldn’t see the value in the statement. But if they did, I suppose I may not be spitting out this blog post.

Last year, I had a surprisingly popular post about My Job as a Tester. I’ve changed roles since then, and I’ve been thinking about an update, but for a variety of reasons, I’m just not ready yet. The biggest reason is that although I’ve been on the team for five months now, my role is still evolving. Once it settles into a bit of a groove (and as other factors resolve), I’m sure I’ll post a recap.

Recently, I’ve been working a lot on pieces of implementation of test infrastructure for my team. Although I’m still heavily involved in testing strategy and test “stuff”, the goal of most of my current work is to enable good testing. Since I sometimes describe my role as an improver of tests, testers, and testing, I’m still on target with my own vision.

While reflecting recently on what I’ve been doing vs. what [anyone else] does as a tester (and catching up on reading, I pondered the fact that what I’m doing now isn’t really testing as “traditionally” defined (whatever that means). However, what I do is making testing better – but am I more of a “productivity engineer” than a tester? Brent Jensen has this description:

A tester’s job is to accelerate the achievement of shippable quality

By that definition, I suppose I’m right on the money. But I know there are people (who will likely tell me I’m damaging the craft, or that I’m mean to them) who don’t call what I do testing – I’m cool with that. I still like my job and my business card still says “Tester”.

By far, my favorite favorite thing to do as a tester is design tests. I love the challenge of crafting a suite of tests that enable team members to make well-informed decisions about product quality (at least that’s the plan). Testers in this role may be part of a whole-team approach where they have a test/quality focus, but have shared team goals. Or, there may still be “devs” and “testers”, but the wall between the two is minimal, and everyone works together (most of the time, at least) to make sure the product achieves both shipping and quality goals. Brent’s definition works pretty well for this role too.

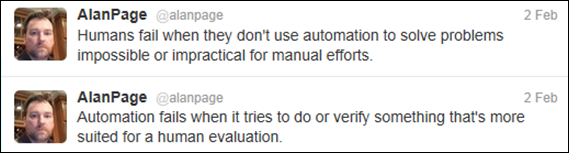

Design overlaps execution. The Think-Design-Execute loop is tight in good testing – this is true whether it’s entirely, partially, or non-automated (or inversely, entirely, partially, or non-manual).

Which leads me to two test roles that, while they definitely exist, I could say they’re dead to me. But they’re not really dead – I know that. What they are is more…irrelevant. Given what I do, where I do it, and a smattering of other context indicators, two test roles are off of my radar.

The first is the test-automation only role. I think the role of taking manual test scripts written by one person and then automating those steps is a bad practice. I know some people like to do this stuff, but I think it’s a waste of time. What you end up with are tests that either should have been automated in the first place, or tests that should not be automated. Fortunately, while I acknowledge that these roles exist, I’m happy to work in a world where these roles do not exist.

For lack of a better term, I’ll call the final role “waterfall-tester” – even though I know this role exists at some (fr)agile shops as well. This is the when-I’m-done-writing-it-you-can-test-it role. Test outsourcing is the most common manifestation of this role, but it exists anywhere where testers only provide value at the end of the cycle. I know great fantastic testers who love this role, and I’ve been in this role myself in the distant past. Today, however, I don’t want to think about testing something where my contribution hasn’t been part of the end-result from the earliest stages. Again, while I fully acknowledge that testers live in this role, I’m happy that it isn’t part of my testing world.

In the end, I’m not exactly sure what this means to anyone but me. As I’ve mentioned (and tweeted) before:

Which really says, that in nearly a thousand words, I’ve (once again) told you nothing new.