I’ve stated my distaste for GUI automation before, but after my last post, I feel the need to offer some clarification. I’ve been meaning to write this post ever since I wrote GUI Shmooey, so here goes.

First off, let me state my main points for disliking GUI automation:

- It’s (typically) fragile – tests often break, stop working, or fail spuriously

- It rarely lasts through multiple versions of a project (another aspect of fragility)

- It’s freakin’ hard to automate a UI (and to keep track of state, verify results, etc.)

- Available tools are weak to moderate (this is arguable, depending on what you want to do with the tools).

Let’s ignore the last point for now, partially because it’s debatable, but more importantly because it’s potentially solvable (one improvement would be for vendors to market these tools as test authoring tools rather than record-and-playback tools).

The first two points above have more to do with test design than with test authoring. It’s possible to write robust (or at least less fragile) tests that keep working as the product evolves; designing tests with abstractions, layers, and other good design principles helps immensely. But doing this right drives the third point home: designing robust GUI automation is difficult. It can certainly be done well, but I’m not completely convinced the return justifies the investment.
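
To make the idea of layers concrete, here is a minimal Python sketch of one common layering (often called the page object pattern). Everything in it is hypothetical: `UiDriver` stands in for whatever primitives a real UI automation tool provides, the control IDs are invented, and `FakeDriver` exists only so the sketch runs without a real UI. The test talks about user intent, and only `ConnectScreen` knows how the screen is built, so a UI change should touch one class rather than every test.

```python
from typing import List, Protocol


class UiDriver(Protocol):
    """Lowest layer: hypothetical primitives a real UI automation tool would supply."""

    def click(self, control_id: str) -> None: ...

    def read_text(self, control_id: str) -> str: ...


class ConnectScreen:
    """Middle layer: the only code that knows how this screen is built.
    If the UI is redesigned, only this class should need to change."""

    SERVER_LIST = "serverList"  # hypothetical control IDs
    CONNECT_BUTTON = "connectButton"
    STATUS_LABEL = "statusLabel"

    def __init__(self, driver: UiDriver) -> None:
        self._driver = driver

    def connect_to(self, server: str) -> None:
        self._driver.click(f"{self.SERVER_LIST}/{server}")
        self._driver.click(self.CONNECT_BUTTON)

    def status(self) -> str:
        return self._driver.read_text(self.STATUS_LABEL)


class FakeDriver:
    """Stand-in driver that records clicks, so the sketch runs without a real UI."""

    def __init__(self) -> None:
        self.clicks: List[str] = []

    def click(self, control_id: str) -> None:
        self.clicks.append(control_id)

    def read_text(self, control_id: str) -> str:
        # Pretend the status label reads "Connected" right after Connect is clicked.
        if self.clicks and self.clicks[-1] == ConnectScreen.CONNECT_BUTTON:
            return "Connected"
        return "Idle"


def run_connect_scenario(driver: UiDriver) -> bool:
    """Top layer: the test is written in terms of user intent, not controls."""
    screen = ConnectScreen(driver)
    screen.connect_to("server-01")
    return screen.status() == "Connected"
```

Swapping `FakeDriver` for an adapter around a real tool would leave `run_connect_scenario` unchanged, which is the property that keeps tests alive across UI revisions.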

That’s why, when designing any test, it’s important to think about the entire test space and to use automation where it helps you discover information about the application under test that would be impractical or expensive to test otherwise. I loathe automated tests that walk through a standard user scenario (click the button, check the result, click the button, check the next result, etc.). But I love GUI automation that can automatically explore variations of a GUI-based task flow. The problem is that the latter requires design skills that many testers don’t consider.
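
One way to let automation explore variations of a task flow, rather than replay one fixed script, is to describe the flow as a small state model and take seeded random walks through it. The sketch below assumes a hypothetical dialog flow (`FLOW`) and only generates the paths. A real harness would perform each action against the UI and verify that the application actually reached the expected next state.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical model of a small dialog flow: each state maps the actions
# available in that state to the state each action should lead to.
FLOW: Dict[str, Dict[str, str]] = {
    "start": {"open_dialog": "dialog"},
    "dialog": {"enter_name": "named", "cancel": "start"},
    "named": {"clear_name": "dialog", "ok": "saved", "cancel": "start"},
    "saved": {"open_dialog": "dialog"},
}


def random_walk(steps: int, seed: int) -> List[Tuple[str, str, str]]:
    """Take `steps` random actions through FLOW, returning (state, action, expected_next).

    A real harness would perform each action on the UI and then verify that
    the application is in `expected_next` before taking the next step.
    """
    rng = random.Random(seed)  # seeded, so a failing walk can be replayed exactly
    state = "start"
    path: List[Tuple[str, str, str]] = []
    for _ in range(steps):
        action = rng.choice(sorted(FLOW[state]))  # sorted for a stable choice order
        next_state = FLOW[state][action]
        path.append((state, action, next_state))
        state = next_state
    return path
```

Running many walks with different seeds (for example, `random_walk(50, seed)` for each `seed` in `range(100)`) covers orderings that a hand-written scenario would rarely try, and any failing seed can be replayed exactly.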

Another place where I like GUI automation is stress and performance testing. A manual test that presses a button a thousand times is out of the question, but it’s perfect for an automated scenario. In fact, I wrote about something similar in HWTSAM (How We Test Software at Microsoft).
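
A stress scenario like this boils down to a loop that repeats an action and checks the application after every iteration, so that the first failure is pinned to a specific iteration. In this sketch, `action` and `check` are hypothetical callbacks that a real harness would wire to the UI. `FakeButton` is an invented stand-in that starts misbehaving after a set number of presses.

```python
from typing import Callable, Optional


def stress(action: Callable[[], None],
           check: Callable[[], bool],
           iterations: int = 1000) -> Optional[int]:
    """Run `action` up to `iterations` times, verifying `check` after each run.

    Returns the 1-based iteration that first failed the check, or None if
    every iteration passed.
    """
    for i in range(1, iterations + 1):
        action()
        if not check():
            return i
    return None


class FakeButton:
    """Stand-in for a real control that starts misbehaving after `fail_after` presses."""

    def __init__(self, fail_after: int) -> None:
        self.presses = 0
        self.fail_after = fail_after

    def press(self) -> None:
        self.presses += 1

    def healthy(self) -> bool:
        return self.presses <= self.fail_after
```

For example, `button = FakeButton(fail_after=700)` followed by `stress(button.press, button.healthy)` returns `701`, the first press after which the check fails.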

Brute force UI automation

In most cases, UI automation that accesses controls through an object model or a similar method tests just as well as automation that drives the UI through button clicks and key presses. Occasionally, however, automating purely through the model can miss critical bugs.

Several years ago, a spinoff of the Windows CE team was working on a project called the Windows Powered Smart Display. This device was a flat-screen monitor that also functioned as a thin client for terminal services. When the monitor was undocked from the workstation, it would connect back to the workstation using a terminal server client.

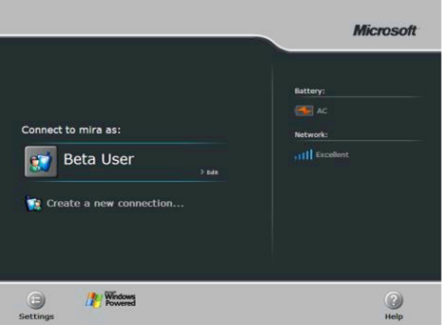

The software on the device was primarily composed of core Windows CE components, but also contained a simple user interface that showed a history of connected servers, battery life, connection speed, and any local applications, as shown in the following graphic. The CE components were all well tested, and the small test team assigned to the device tested several user scenarios in addition to some manual functionality testing. Toward the end of the product cycle, I spent some time working with the team, helping them develop some additional tests.

One of the first things I wanted to do was create a few simple tests that I could run overnight to find any issues with the software that might not show up for days or weeks for a typical user. There was no object model for the application and no other testability features, but given the simplicity of the application and the amount of time I had, I dove into what I call brute force UI automation. I quickly wrote some code that could find each of the individual windows on the single screen that made up the application. I had planned to look up the specific Windows message that this program used, but I hit a roadblock waiting for access to the source code. I’ve never been a fan of waiting around, so I wrote brute-force code that centered the mouse over each control’s position on the screen and sent a mouse click to that point. After a few minutes of debugging and testing, I had a simple application that would randomly connect to any available server, verify that the connection was successfully established, and then terminate the terminal server session.
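
The brute force approach described above comes down to two steps: find a control’s rectangle on the screen, then move the mouse to its center and click. On desktop Windows, those steps would typically use Win32 calls such as `GetWindowRect` and `SendInput`. In this Python sketch they are replaced by injected callbacks (`move_mouse`, `click`, `is_connected`, `disconnect`), all hypothetical, so the logic runs anywhere. The `Rect` field order mirrors Win32’s `RECT` (left, top, right, bottom).

```python
import random
from typing import Callable, Dict, NamedTuple, Tuple


class Rect(NamedTuple):
    """Screen rectangle in the same (left, top, right, bottom) order as Win32's RECT."""
    left: int
    top: int
    right: int
    bottom: int


def center_of(r: Rect) -> Tuple[int, int]:
    """Integer midpoint of the rectangle; close enough for a click target."""
    return ((r.left + r.right) // 2, (r.top + r.bottom) // 2)


def brute_force_click(r: Rect,
                      move_mouse: Callable[[int, int], None],
                      click: Callable[[], None]) -> None:
    """Click a control by its screen position instead of sending it a window message."""
    x, y = center_of(r)
    move_mouse(x, y)
    click()


def connect_cycle(server_rects: Dict[str, Rect],
                  rng: random.Random,
                  move_mouse: Callable[[int, int], None],
                  click: Callable[[], None],
                  is_connected: Callable[[str], bool],
                  disconnect: Callable[[str], None]) -> str:
    """One test iteration: click a random server, verify the session, then end it."""
    name = rng.choice(sorted(server_rects))
    brute_force_click(server_rects[name], move_mouse, click)
    if not is_connected(name):
        raise AssertionError(f"connection to {name!r} was not established")
    disconnect(name)
    return name
```

Calling `connect_cycle` in an endless loop gives the shape of the overnight connect, verify, disconnect test from the story.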

I configured the application to loop infinitely, started it, and let it run while I took care of a few final odds and ends before heading home for the day. I glanced over my shoulder once in a while, happy to see the application connecting and disconnecting every few seconds. However, just as I stood up to leave, I took one final look at my test application and saw that it had crashed. I happened to be running under the debugger and saw that a leak had caused the crash: the application had run out of a particular Windows resource. At first, I thought the problem was in my own application, so I spent some time scanning its source code, looking for any place where I used that type of resource or where I might be misusing a Windows API.

I couldn’t find anything wrong but still thought the problem must be mine, and that I might have caused it during one of my earlier debugging sessions. I rebooted the device, set up the tests to run again, and walked out the door.

When I got to work the next morning, the first thing I noticed was that the application had crashed again in the same place. By this time, I had access to the source code for the application, and after about an hour of debugging, I found that the problem was not in the connection code but in the graphics code. Every time one of the computer names was selected, the application ran code that did custom drawing: a small blue flash so that the user would know that the application had recognized the mouse click, much like the way a button in a Windows-based application appears to sink when pressed. The problem was that every time this drawing code ran, it leaked a resource. After a few hundred connections, the leak was big enough that the application would crash when the custom drawing code executed.
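
Leaks like this one are easier to catch if a long-running test also samples a resource counter and compares it against a baseline taken after a short warm-up. The sketch below is generic: `sample` is a hypothetical callback (on desktop Windows it might wrap `GetGuiResources`, which reports a process’s GDI or USER object count), and `FakeDisplay` is an invented stand-in that allocates one handle per selection and, when `leaky` is set, never releases it. The story doesn’t name the exact resource, so treat the counter as an assumption.

```python
from typing import Callable, Tuple


def detect_leak(iteration: Callable[[], None],
                sample: Callable[[], int],
                warmup: int = 10,
                iterations: int = 300,
                tolerance: int = 5) -> Tuple[bool, float]:
    """Run `iteration` repeatedly while watching the counter returned by `sample`.

    Warm-up runs let caches and one-time allocations settle before the baseline
    is taken. Returns (leak_suspected, average growth per iteration).
    """
    for _ in range(warmup):
        iteration()
    baseline = sample()
    for _ in range(iterations):
        iteration()
    growth = sample() - baseline
    return growth > tolerance, growth / iterations


class FakeDisplay:
    """Invented stand-in: each server selection allocates a handle for the
    highlight flash; when `leaky` is True, the handle is never released."""

    def __init__(self, leaky: bool) -> None:
        self.leaky = leaky
        self.open_handles = 0

    def select_server(self) -> None:
        self.open_handles += 1  # allocate a drawing object for the flash
        if not self.leaky:
            self.open_handles -= 1  # correct code releases it after drawing
```

With the leaky fake, `detect_leak` reports steady growth of one handle per iteration, the kind of trend that, left alone, ends in a crash like the one described above.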

I don’t think I ever would have found this bug if I had written UI automation that exercised the functionality without going through the UI directly. I still think that in most cases, the best solution for robust UI automation is a method that accesses controls without interacting with the UI, but now I always keep an eye out for any user interface code that does more than the functionality that the UI represents.
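
For contrast, here is what testing below the UI can look like: a unit test against the model that a screen would render, with no clicks at all. `ServerHistory` is a hypothetical model for the device’s list of previously connected servers; its most-recent-first ordering and size limit are invented for illustration. Tests like these are fast and stable, but they never execute custom drawing code, which is why they would not have caught the leak in the story.

```python
import unittest
from typing import List


class ServerHistory:
    """Hypothetical model behind the list of previously connected servers.
    The screen would only render this object, so its rules can be tested
    without clicking anything."""

    def __init__(self, limit: int = 5) -> None:
        self.limit = limit
        self._names: List[str] = []

    def record_connection(self, name: str) -> None:
        if name in self._names:
            self._names.remove(name)  # a repeat connection moves to the front
        self._names.insert(0, name)
        del self._names[self.limit:]  # keep only the most recent entries

    def names(self) -> List[str]:
        return list(self._names)


class ServerHistoryTests(unittest.TestCase):
    def test_most_recent_first(self) -> None:
        h = ServerHistory()
        h.record_connection("alpha")
        h.record_connection("beta")
        self.assertEqual(h.names(), ["beta", "alpha"])

    def test_repeat_moves_to_front(self) -> None:
        h = ServerHistory()
        for name in ["alpha", "beta", "alpha"]:
            h.record_connection(name)
        self.assertEqual(h.names(), ["alpha", "beta"])

    def test_limit_drops_oldest(self) -> None:
        h = ServerHistory(limit=2)
        for name in ["a", "b", "c"]:
            h.record_connection(name)
        self.assertEqual(h.names(), ["c", "b"])
```

Saved as, say, `test_history.py`, it runs with `python -m unittest test_history`.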

To be clear, I am not completely against GUI automation, but I do think that it’s easy (too easy) to get wrong, and that it’s overrated and undervalued among many testers. The best part about my opinion is that if you disagree with me, you have to write good GUI automation. In that case, we all win.

For the heck of it, I’ve included a screenshot of the app, which is missing from the book (this was the best image I had, and I didn’t think it was good enough to publish).

I’ve been wondering about the reasons for fragility in UI automation, so let’s see what I can come up with:

1. Is it because the UI is the least cared-about part of the product in the early stages and keeps changing until the last moment? But then that has nothing to do with automation; it’s about the fragility of the UI itself.

2. Is it because the UI sits several layers up in the architecture, so the possible points of failure multiply? Again, this is not specific to automation; it’s a product problem.

3. Is it that the tools we use are not mature? That requires deeper analysis and knowledge, but at first glance I don’t see this as the major problem.

It seems that I’ve failed to put my finger on what really leads to this fragility in UI automation, but what becomes clear is that UI automation has to keep changing continuously along with the software it tests, and good design can make that change less painful or even automatic.

“I loathe automated tests that walk through a standard user scenario (click the button, check the result, click the button, check the next result, etc.)”

Would you rather sit there and perform those scenarios every day once the build comes out? That’s a prime benefit to automation, in my mind!

Neither.

Either write code that automates those tasks from the object model (ideally in a unit test for that code), or, if appropriate, let people (or users) find any problems in clicking buttons during normal use.

Use code to help you do things that are impossible (or difficult) for humans to do – not to replace arbitrary usage scenarios.

Okay, so you don’t MIND that type of automation, you just view it differently. That makes more sense. It’s still invaluable to actually have that coverage be automated.

Most of my job at Microsoft is that exact type of automation — identify the typical user scenarios for a given feature, and develop an automated test to cover it.

At least, most of my time writing code is spent writing code for that purpose. The amount of time I spend writing code out of my entire time working is unfortunately much lower than I’d like.

Wild guess, but do you work in Office?

IE. Does Office have a similar approach? (Didn’t realize you could nest comments here, btw!)