After releasing The A Word, I didn’t plan on writing any more posts about automation. But, after pondering transitions in test, and after reading this post from Noah Sussman, I have a thought in my head that I need to share.

I don’t think testers should write automation.*

I suppose I better explain myself.

Not all automation is created equal. Automation works wonderfully for short confirmatory or validation tests. Unit, functional, acceptance, and integration tests, along with other “short” tests, lend themselves very well to automation. But I think it’s wasteful and inefficient to have testers write this automation – it should be written by the code owners. Testing your own code (IMO) improves design, prevents regression, and takes much, much less time than passing code off to another team to test.

That leaves the test team to write automation for end-to-end scenarios. There’s nothing wrong with that…except that writing end-to-end automated tests is hard (especially, as Noah points out, at the GUI level). The goal of automation (as touted by many of the vendors) is to enable running a bunch of tests automatically, so that testers will have more time for hands-on testing. In reality, I think that most test teams spend half of their time writing automation, half of their time maintaining and debugging automation, and half of their time doing everything else.

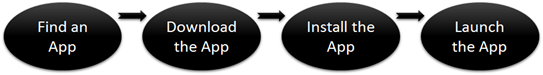

Let’s look at a typical scenario.

Pretty easy – you only need to automate three actions, add a bit of validation and you’re done!

If you’ve attempted something like this before, you know that’s not the whole story. A good automated test doesn’t just execute a set of user actions and then go on its way – you need to look at error conditions, and try to write the test in a way that prevents it from breaking.
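To make that concrete, here is a minimal Python sketch of the search, download, install, and launch scenario as an automated test that checks every step instead of assuming success. `FakeStore`, `StepFailed`, and `run_scenario` are hypothetical names invented for illustration; a real test would drive the actual product through a GUI or API driver, which is where most of the extra fragility comes in.

```python
class StepFailed(Exception):
    """Raised when a scenario step fails; the message names the failing step."""


class FakeStore:
    """Hypothetical stand-in for the product; `fail_at` simulates an error path."""

    def __init__(self, fail_at=None):
        self.fail_at = fail_at

    def _step(self, name, app):
        if name == self.fail_at:
            return {"ok": False, "error": f"{name} failed for {app}"}
        return {"ok": True}

    def search(self, app):
        return self._step("search", app)

    def download(self, app):
        return self._step("download", app)

    def install(self, app):
        return self._step("install", app)

    def launch(self, app):
        return self._step("launch", app)


def run_scenario(store, app):
    """Run the steps in order and stop at the first failure, so the report says
    where the scenario broke, not just that it broke."""
    for name in ("search", "download", "install", "launch"):
        result = getattr(store, name)(app)
        if not result["ok"]:
            raise StepFailed(f"{name}: {result['error']}")
    return "passed"
```

For example, `run_scenario(FakeStore(fail_at="download"), "FizzBuzz")` raises `StepFailed` with the message `download: download failed for FizzBuzz`. Even this toy version needs an error path per step; waits, retries, and UI locator maintenance would all come on top of it.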

It’s hard. It’s fragile. It’s a pain in the ass. So I say, stop doing it.

“But Alan – we have to test the scenario so that we know it works for our customers”. I believe that you want to know that the scenario works – but as much as you try to be the customer, nobody is a better representative of the customer than the customer. And – validating scenarios is quite a bit easier if we let the customers do it.

NOTE: I acknowledge that for some contexts, letting the customers generate usage data for an untested scenario is an inappropriate choice. But even when I’ve already tested the scenario, I still want (need!) to know what the customer experience is. As a tester, I can be the voice of the customer, but I am NOT the customer.

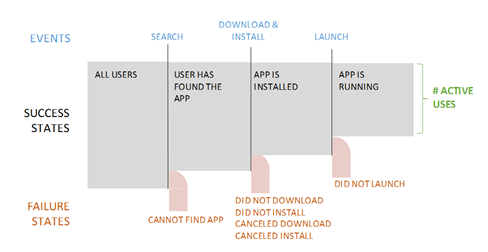

Now – let’s look at the same scenario:

But this time, instead of writing an automated test, I’ve added code (sometimes called instrumentation) to the product code that lets me know when I’ve taken an action related to the scenario. While we’re at it, let’s add more instrumentation at every error path in the code.
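A minimal sketch of what such instrumentation might look like, assuming a Python product. `make_tracer` and `install_app` are hypothetical; a real product would send events to a telemetry or logging service rather than a list, and the timestamp layout only imitates the sample log lines in this post (reading it as month, day, year, hour, minute, then seconds and hundredths is a guess).

```python
from datetime import datetime


def make_tracer(sink, clock=datetime.now):
    """Return a trace function that appends timestamped scenario events to `sink`.

    `sink` is any list-like object standing in for a telemetry pipeline;
    `clock` is injectable so the output can be tested."""
    def trace(message):
        # "%m%d%Y-%H%M-%S%f" truncated to 18 chars keeps two fractional digits.
        stamp = clock().strftime("%m%d%Y-%H%M-%S%f")[:18]
        sink.append(f"{stamp}: {message}")
    return trace


def install_app(app, trace, store_available=True):
    """Hypothetical product code path, instrumented at each step and error path."""
    trace(f"Search started for {app}")
    if not store_available:
        trace("ERROR 119: Store not available.")  # error paths are traced too
        return False
    trace(f"Download started for {app}")
    trace(f"Install started for {app}")
    trace(f"{app} launched successfully")
    return True
```

Because the clock is injectable, `make_tracer(events, clock=lambda: datetime(2014, 5, 7, 16, 1, 31, 430000))` produces entries such as `05072014-1601-3143: Search started for FizzBuzz`.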

Now, when a user attempts the scenario, you could get logs something like this:

05072014-1601-3143: Search started for FizzBuzz
05072014-1601-3655: Download started for FizzBuzz
05072014-1602-2765: Install started for FizzBuzz
05072014-1603-1103: FizzBuzz launched successfully

or

05072014-1723-2143: Search started for FizzBuzz
05072014-1723-3655: Download started for FizzBuzz
05072014-1723-2945: ERROR 115: Connection Lost

or

05072014-1819-3563: Search started for FizzBuzz
05072014-1819-3635: ERROR 119: Store not available.

From this, you can begin to evaluate scenario success by looking at how many people get through the entire scenario, and how many fail for particular errors.
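A rough sketch of that evaluation in Python, assuming the log lines have already been grouped into one list per user session (the sample logs carry no session id, so that grouping is an assumption). `summarize` is a hypothetical helper: it counts sessions that reach a successful launch and attributes each failed session to its first error.

```python
import re
from collections import Counter

ERROR_PATTERN = re.compile(r"ERROR \d+: .+$")


def summarize(sessions):
    """Count completed scenarios and first-error reasons.

    `sessions` is a list of per-user logs, each a list of lines like the
    samples above."""
    succeeded = 0
    errors = Counter()
    for lines in sessions:
        if any(line.endswith("launched successfully") for line in lines):
            succeeded += 1
            continue
        for line in lines:
            match = ERROR_PATTERN.search(line)
            if match:
                errors[match.group(0)] += 1  # e.g. "ERROR 115: Connection Lost"
                break  # attribute each failed session to its first error
    return {"total": len(sessions), "succeeded": succeeded, "errors": errors}
```

Run over the three sample logs above, it reports one success out of three sessions, with one `ERROR 115: Connection Lost` and one `ERROR 119: Store not available.`; counts like these are the raw material for a success rate or an error breakdown chart.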

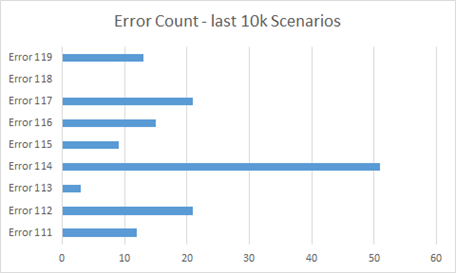

or generate data like this:

And now we have information about our product that’s directly related to real customer usage, and the code to enable it is substantially simpler and easier to maintain than traditional scenario automation.

*A more precise way to put “I don’t think testers should write automation” is: I think that many testers, in some contexts, don’t need to write automation anymore. Developers should write more automation, and testers should try to learn more from the product in use. Use this approach in financial applications at your own risk!


“In reality, I think that most test teams spend half of their time writing automation, half of their time maintaining and debugging automation, and half of their time doing everything else.”

Your post was worth it for that line alone. So true, speaking as someone who got into this business as a GUI automator.

…and half the time waiting for an answer to their automation question (usually Selenium) on SQA StackExchange

Automation takes a lot of time, and it adds to the burden on testers who are also testing the product manually.

That is a great sentence… been there, and that’s part of the reason I created MetaAutomation.

I pretty much agree with this. I am more of a fan of end to end automation than most, but I think that the same programmers who write the production code are the best people to code that automation. And you may not need any end to end automation if you have useful regression at lower levels, especially if you are guiding development with examples and executable tests and leaving some of those tests in your regression suite. I’m a big fan of the living doc that comes out of those types of tests.

I love automating tests, but I finally realized I was never going to be that good at it if I could only spend maybe 20% of my time doing it. Creating well-designed, maintainable code takes a lot of continual practice IME.

My team currently has to do a lot of manual regression each release in spite of extensive suites of automation from unit level up to UI level. We couldn’t live without UI automated tests because we have a JS app with a lot of crazy stuff like concurrent updates and caching and stuff. But IMO we could be automating more, because I really dislike doing manual regression testing. Manual exploratory testing is fine and fun, regression is just drudgery to me.

Is there no option for regression testing other than writing automation code (preferably not manual exploratory techniques)?

In this agile world, if development is given 30 hours and testing 8 hours, do developers prefer to write automation for their own code? I don’t think so.

Most devs don’t test their own code, refactor it, etc.

And if the customer is the one to test, then testers would have no work 🙂

So…you’re saying that developers shouldn’t test their own code because it will put testers out of a job?

Regarding “you may not need any end to end automation”: I would still prefer to be able to do end-to-end automation. The example in the post may be a simple one, but consider similar end-to-end automation for transaction systems, the shopping cart experience, and enterprise commerce systems, where revenue data and customer transactions flow through. Consider areas with external system dependencies, for instance a travel ticketing system that buys the tickets and passes that data on to hotel booking and car rental: each is a complex system by itself, and ensuring data quality across them is hard. The same goes for quoting, contracts, ordering, invoicing, and fulfillment. Are the items shipped to the right locations when multiple parties are involved (viz., channel partners, distributors, fulfillment vendors, and finally the end customer, who may provide different ShipTo addresses for different items on the same purchase order), and on top of that, are the different tax codes and calculations correct?

I agree with the post; still, these are some of the data quality areas where end-to-end testing is an absolute must, and testers should be thinking about, testing, and exploring the feasibility of automating such scenarios. Vertical applications often change their signatures or systems without realizing which dependent systems might break due to a simple upstream change.

Agreed, exploratory testing on these end-to-end systems is usually fun, and the data analysis part is time-consuming. Performance plays a key role in how fast data can move from one system to another, especially when the systems belong to different organizations and companies; that depends on how well the data transfer architecture is designed, and so on.

As to who creates the automation, it depends on the team IMO, but the automation must be written in a way that works and enhances communication around the team on quality issues.

I agree on the need for bottom-up automated verifications and E2E automated verifications, but especially with the latter, it’s important to do it in a way that’s as robust as possible and hides one-off failures due to race conditions.
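One common way to keep E2E checks from failing on timing races (a general technique, not MetaAutomation’s specific mechanism) is to poll for an observable condition with a deadline instead of sleeping for a fixed time. A minimal Python sketch, with `wait_until` as a hypothetical helper name:

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1,
               clock=time.monotonic, sleep=time.sleep):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Returns the truthy value; raises TimeoutError if the deadline is reached."""
    deadline = clock() + timeout
    while True:
        result = condition()
        if result:
            return result
        if clock() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        sleep(interval)
```

A check would then call something like `wait_until(lambda: page_shows_installed())`, where `page_shows_installed` stands for whatever hypothetical probe the product exposes. The timeout still fails the check if the state never appears, so real bugs are not silently swallowed.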

Agree on “living document”, and MetaAutomation takes this furthest, because the hierarchical check steps themselves are self-documenting. The XML document created at each check run shows clearly what works, what doesn’t, and what is blocked, with milliseconds-to-step-completion for each check step (whether or not there are child steps).

Agree on dislike of the manual-testing regression drudgery. Consider using MetaAutomation (starting with the Atomic Check pattern) to take care of that drudgery for you and make the manual testing more interesting. This will find and communicate failures much faster, which again shortens the communication loop between devs, QA, and leaders of the org.

I agree with the main idea – testers shouldn’t write the automated tests. I also agree that automating UI level tests exercising the whole system in order to “do what the user does” is a big waste of time. But I don’t think “get the actual user to do it for you” is the whole picture.

Many UI level “scenario” tests are often used as a catch-all net for issues that are more effectively caught lower down the stack with more targeted automated testing. Sometimes we don’t even need to test against the entire stack to answer the question we’re asking. Being smarter about what we automate and at what level is part of the solution.

The other part is recognising that by automating tests at a UI level, we are not “being the user”. If we want to find out what the user does, we can log actions as you suggest. If we want to discover possible user pathways through the system we should analyse the system design, model the workflows and make sure our implementation plan accounts for these flows.

This way we take a naive approach (trying to automate what users might do) and turn it into a considered approach (automating as much as we can to account for specific concerns, examining possible user pathways in our system design and monitoring actual usage to feed back into our design and testing).

Medical and airplane software should also not follow this approach.

In all seriousness, I like this approach, but there are certain things that are hard to verify with instrumentation:

– UI changes.

– Accessibility.

– Refactoring code to make it more readable.

For accessibility, you could get data, but the sample could be too small to make a difference in the stats. What are your thoughts on these?

From your experience in Xbox:

– Any idea how video quality was tested for Skype? How did they verify the app actually displayed 1080p video with a good connection?

– For UI changes, how could one argue the impact of changing the hue of the green color on Xbox or the sounds when moving through the dashboard?

Roberto, the list is not limited to medical and airplane software. You can include embedded devices, carrier-grade communication equipment, enterprise IT equipment, and many more.

I was shocked to learn that even NASA apply hot patches to production software. That is, software patches are applied over the wire, to systems that are inside robots that are themselves floating through outer space!

This is in addition to *years* of pre-launch testing.

We do test airplanes in production – it is called the “test flight”

>> For UI changes, how could one argue the impact of changing the hue of the green color on Xbox or the sounds when moving through the dashboard?

That one is easy! Online controlled experimentation… you may have heard it called A/B testing.
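A minimal sketch of how such an experiment might be evaluated, assuming the metric is a per-user success rate (for example, the share of users who reach some hypothetical dashboard goal) in a control group A and a variant group B. It uses a standard two-proportion z-test with a pooled normal approximation; real experimentation platforms often use more elaborate statistics.

```python
from math import erf, sqrt


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference in success rates.

    Uses the pooled-variance normal approximation, which is reasonable only
    when each group has plenty of successes and failures."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via erf, then the two-sided tail probability.
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value
```

With illustrative (made-up) numbers of 1,000 successes out of 10,000 users for A and 1,100 out of 10,000 for B, `z` comes out at roughly 2.3 and the two-sided p-value at roughly 0.02.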

Agree on A/B testing, but NO to testing planes in production with “test flight.” Do you know how many times Boeing flew the 787 with instrumentation before paying passengers got on board?

I agree, you don’t have to write automation for a light game, e.g., Angry Birds. Also, DDQ (instrumentation, TIP, analytics, …) is very powerful for all cloud products.

But, somebody has to write automation to regress quality before the customer sees it for apps with more impact or even just more PII.

Hi Alan!

I would like to point out that context is what matters.

What does it actually mean that developers write automated test code for their code? Does it mean that they know how to use an automation framework, or does it mean that they know the testing concepts that will drive the design of their automation code?

In order to do effective test automation in their context, developers should know about race conditions, boundary testing, flow control, and code coverage (there are 101 types of code coverage, not only statement coverage).

Software testers could be in a position to educate software developers about those testing concepts.

Thanks for writing this post that triggered this important conversation.

Regards, KArlo.

One major problem with relying on users is that now you have to go find users who are willing to be subject to pre-alpha, alpha, beta versions. Many times in big companies people are running around trying to get their product tested through users in other parts of the companies – this reduces risk of customer backlash esp. for enterprise products. For free products, sure something can be in beta form and consumers are a bit more tolerant since the app is free – however if the product has serious issues such as with installing the app – good luck trying to convince the user to give the product a 2nd chance 🙂

It is possible that automation will always be playing catch-up, but you need a minimum to ascertain the quality of the system. More often than not, if I am committed to making my product better and I had that extra time, that time is better spent understanding code (possibly to augment tests or even instrumentation) or debugging sticky issues in my own product, rather than randomly trying different scenarios in products of which I am strictly a consumer. How many times would you get users to try scenarios outside the mainline flow?

Furthermore, speaking to instrumentation, I would turn this around and ask why the dev doesn’t do this himself. After all, it leads to improved understanding of usage and analytics. So if the dev is also doing this, what then is the role of the test owner 🙂

John – you’d be surprised how often this is occurring already. For web sites with a back end (amazon, Netflix, facebook, etc.), updates are deployed (often “untested”) all-the-time. In that case, remember that the tracing happens on the server. But even in the case of desktop applications, any time you’ve selected an option in an app to “improve this app by sending data to“, you’re collecting this sort of data.

And yes, I would prefer that Dev adds the instrumentation. Test will still write some long tests (stress, perf, etc.), but will also spend time analyzing the data (customer data is the new test-pass results :})

Hi John!

Regarding your statement:

” How many times would you get users to try scenarios outside the mainline flow?”

That is true. But as testers, what we want to find out from users is not ALTERNATIVE flows through our scenarios; we want to find NEW SCENARIOS, ones that we have not thought of.

Regards, KArlo.

I like the error graph approach. How do you arrive at it?

Test done well is a more complex challenge than dev done well, because testing has to monitor more different things and more abstract factors through the SDLC or release cycle. It follows that if you have people who are able to do the automation well who are NOT tasked with also creating the product, you’d lose value if you just tried to have the developers do the same thing; devs are creating the product and that is challenge enough. For devs to write automation that’s more than unit tests might be a distraction from their main focus.

If the team has people doing test role who can also write good automation (which means, grok MetaAutomation) then it’s better to have the devs focus on developing the product.

Alan this is a post that puts into words some ideas I have been struggling with for a while! Thanks especially for the diagrams — I’ll be referencing these in the future!

The only thing I agree with is that unit testing should be done by developers. UI automation testing should remain with testers; automation testing is NOT a replacement for manual testing, it is a tool to help testers. Developers should not be writing automated tests for functional, acceptance, and integration testing because they wrote the code.

Instead of painting all of test with one brush, you should be applying your theories to SDLC settings where testers writing UI automation tests don’t work.

A good manual tester will ask the developer a lot of questions about the AUT to better understand the system, thereby enabling them to write very good manual test cases. So does this mean developers should write manual test cases too?????

Testers are detectives; it is our JOB to ask questions. To say that the people who have the answers should do the job is like saying there is no need for testers in software development at all: after all, developers know what they wrote, so they should be writing the manual test cases.

Your article is annoying, testing as a profession is fighting for its rightful place in software development, ideas like yours don’t help.

Alan, I don’t think this is a good argument for testers not writing automation. I think it’s a good argument for not automating tests that are a long series of potentially complex actions. While those “complex tests” may be normal for interactive scripted testing, they don’t work so well for automated tests.

Who writes the automated tests seems the wrong question, to me. What tests should be automated is a better one. You can write good automated tests that check the things your long-running tests check, but do so in terms of specifying discrete capabilities of the system. These tests will interact more with the system than do the long-running ones, but when that’s done by a machine rather than a person, this is not a problem.

As to who writes them? Let the tester and programmer figure out the best way to divide the work.

Filling the logs with trace messages makes them generally useless for noticing production issues because of all the noise. I’ve seen systems where logging took an undue toll on the performance because of the large number of such messages. There are, in my opinion, better ways of approaching logging, too.
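One common mitigation for the noise and overhead described here (not something proposed in the post) is sampling: record detailed scenario traces for only a fixed fraction of sessions. This sketch assumes each session has a string id; `should_trace` is a hypothetical helper that hashes the id so that a sampled session keeps all of its events together.

```python
import hashlib


def should_trace(session_id: str, sample_rate: float) -> bool:
    """Deterministically decide whether a session's trace events are recorded.

    Hashing the id (instead of calling random() per event) keeps every event
    of a sampled session together, so per-session funnels stay intact."""
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return bucket < sample_rate
```

For instance, `should_trace(session_id, 0.01)` would keep roughly 1% of sessions. Error events could still be logged for every session so rare failures aren’t lost to sampling.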

Yep – you’re correct. It’s just that I’ve already ranted extensively about people trying to automate things that shouldn’t be automated, so rather than rant again about the infection, I chose to amputate.

There’s plenty of automation and coding tasks left for testers if they stop wasting their time (perf, stress, monitoring, tools, etc.).

I simplified the logging, but plenty of companies use the approaches I mentioned above to track usage from millions of users. It’s definitely not an approach for everyone, but it /is/ a viable option.

Alan, your post makes much more sense to me when I assume that when you use the word “automation” your meaning is “automate the SUT according to steps from a manual test, use case or user story.” That way, “automation” excludes the checks that James Bach writes about.

A “check” is an automated procedure that verifies specific aspects of business behavior. It’s not the same as an automated manual test, because it’s explicit about not “testing” things that an automated test can’t reasonably test anyway. Bach writes that testing is a “sentient” activity, and I agree: you need people to test. Automation can’t be smart enough to “test” anything. Automation can check, though.

Actually, I think the ongoing semantic evolution that leads to the discovery that “automated test” is a contradiction in terms is a good thing for the profession, and for software quality in general!

Which tests provide new information BY MANUALLY EXECUTING the test steps? Probably should not be automated. Long use cases should not necessarily be automated, because a lot of information is lost in automating.

Which tests provide new information ONLY IN THE END RESULTS of the test? Probably should be automated. Since the act of writing the automation for these tests doesn’t change perspective on the issue, whether a developer or tester writes this code depends on the company. It could be that the development team is swamped with new code, bug fixes, or other projects and doesn’t have the bandwidth to write this automation. Though developers are arguably better at writing the automation, there’s no value lost in having testers write it.

Which tests provide new information THROUGH THE ACT OF WRITING AUTOMATION for a test? Probably should be automated by the developers, because they’re the people we’re trying to provide information to as quickly as possible. The act of coding automation changes your perspective on the issue, and opens new possibilities for test. It also shifts mentality towards thinking like a developer, and can the tester ever be better at developer-think than the developer herself? If another developer thinking on the project would provide value, why not have a developer run/automate the tests instead of testers?

Which part of a test case provides information on the value of the project, and to whom is that information valuable?

So what you are saying is – testers need not write automation and it should be done by developers themselves. The title of the post is misleading. You might have to change it.

Thank you Alan, I do love hyperbole!

I’m not all that interested in who should write UI automation… the answer almost certainly lives somewhere between who CAN write it, and who WANTS to write it.

I’m VERY interested in discussing the value of automation… do feel free to hit me up for a chat. I’d really enjoy talking about that.

I like the sentence “I don’t think testers should write automation” in every true and practical sense. However, I would like to understand why testers should not write automation. Is it because they cannot, or because developers are better positioned to write it?

I guess some products can allow many users to fail while using the SW in production just to be able to figure out some things are not working…

But other products might just lose their business if not tested up front to verify that most scenarios work well before releasing to production.

Would you like your Bank for instance to use that postmortem approach?

Kobi Halperin @halperinko

I cannot agree with most of the content. Some of the concepts of testing and automation are incorrect.

1. Why have testers develop test scripts? Because an independent tester can detect more defects than the developer, whether manually or through automation. But I agree that automated unit tests should be done by developers, because they understand the code logic.

2. Automation can run during off hours; automation can run 24/7; automation can run in many environments at the same time; automation can run and generate reports faster; automation can save effort during test execution. Those are all advantages of test automation.

3. In a new release, automation should run the regression tests, and the changes should be tested manually. This can save a lot of time and effort.

4. Test automation is a high-risk type of testing: if the tester doesn’t have enough experience and skill to set up a good framework, the automation project will probably fail.

Completely agree with this, Alan. Great article.

1) In all the discussion on testing, people lose sight of the classic Dijkstra quote (from 1970!!):

Program testing can be used to show the presence of bugs, but never to show their absence

2) In any case in my experience, invariably tests are never written with less care than “real code” – otherwise it does take too long.

One memorable experience: the requirement was that a certain value be configurable, and then we needed to change the configured value from 3 to 5.

Developer – “but I hard coded the 200 unit tests to 3”

Also, when do you test that “configuration” actually works? How much effort do you invest in doing it?
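One way to avoid the 200-hard-coded-tests problem, sketched in Python under the assumption that the value is a retry limit (the anecdote doesn’t say what it was): derive expected results from the configured value and run the same test over several configurations, which also exercises the configuration itself. `call_with_retries` and the `max_retries` key are hypothetical.

```python
def call_with_retries(operation, config):
    """Hypothetical product code: retry `operation` up to config["max_retries"] times.

    Returns the number of attempts it took to succeed."""
    for attempt in range(1, config["max_retries"] + 1):
        if operation():
            return attempt
    raise RuntimeError(f"gave up after {config['max_retries']} attempts")


def failing_operation():
    """Return an operation that always fails, plus a list recording each call."""
    calls = []

    def operation():
        calls.append(1)
        return False

    return operation, calls


def test_retries_follow_config():
    # Expectations come from the config, not a hard-coded literal 3,
    # so changing the configured value does not break the test.
    for max_retries in (3, 5):
        operation, calls = failing_operation()
        try:
            call_with_retries(operation, {"max_retries": max_retries})
        except RuntimeError:
            pass
        else:
            raise AssertionError("expected the retries to be exhausted")
        assert len(calls) == max_retries


if __name__ == "__main__":
    test_retries_follow_config()
```

With pytest, the loop would more idiomatically be written as `@pytest.mark.parametrize("max_retries", [3, 5])`; the plain loop keeps the sketch dependency-free.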

Awesome, I share the same view and I am really happy to read this blog. I struggled for more than a year to implement this approach (design and architecture solutions for testability in production, instrumentation, simulation) in my team, and finally convinced management to agree to it. We convinced management by doing load testing in production.

We have started implementing this, but the challenge is how to quantify this approach (since it is hard to quantify the value of testing). It would be really helpful if you could share your thoughts about quantifying it.

You should rename test automation to GUI-based test automation. Test automation is a vast area, and GUI is one key part of it. I completely agree that GUI-based automation is fragile; it is more debugging and maintenance than actual testing. But in projects, there are lots of areas where automated checks (I don’t call them tests) have no alternative. And unless you are an expert tester, it is always a bad idea to move to automation.

Amazing, I have a very similar take on that matter. In fact, I wrote an article a week ago about my experience and how I think that, as a developer, I can have a greater impact on the quality of the product. Take a look; I would appreciate your thoughts on it: https://hackernoon.com/love-quality-become-a-developer-9339d60dbcd6#.ij5x88xrh

We completely agree and practice this methodology internally. Since we don’t use Selenium or write complex code, our developers have time to author E2E tests.