I’ve been thinking about writing this post for years, but have had it floating around between my head and a draft for the last few months. I’ve held on to the post because even though it’s not my intent, I’m probably going to piss off someone who takes my points the wrong way or misinterprets what I say. I’m still not sure where I’m going with it, but I need to try to get these thoughts out sometime, and now is as good a time as any.

I’m worried about the future of software testing. To be fair, I’m probably as much to blame as others, but I’m so bothered about this that I’m actually beginning to get a bit depressed. But I suppose I should explain myself before I rant any more – so let’s start with the current state of software testing.

But before that (and in the hope of defusing the hard message a bit), let me say that I’m quite happy with what’s been happening in software testing recently. The “voice” of software testing is gaining momentum, and there are many active participants in discussing the basics of software testing so that the new crop of testers has a strong base of knowledge for the future. We have brilliant people emerging in the world of software testing, and I love the passion they have for the craft. It’s great to see so many testers online discussing their experiences and working hard to hone their skills. But we’re still talking about the same stuff testers were talking about ten years ago. The testing community is abuzz with discussions about basic tester skills, basic automation approaches and basic approaches to measurement. The discussions are lively, and people are learning – but they’re learning the same things testers were learning last year, the year before that, and the year before that. I like presenting at and attending conferences like STAR and other similar conferences – but those conferences are targeted at new testers, and talk topics are dominated by the same topics that have been around for years. I checked out the STAR conference proceedings from ten years ago (you can look too), and they look remarkably similar to this year’s conference. In some cases, the arguments and points are more refined than ten years ago, but a remarkable number of those talks could just as easily show up on any conference program this year.

The state of the art in software testing will go nowhere if we never look beyond the basics. Highlighting flaws in simple applications written by the IT department of a small company (or parking garage) is interesting, and it develops basic skills, but it does nothing to help us understand better ways to test huge interconnected systems or services under constant load. The future software systems shown in many science fiction movies may never come to light – and it won’t be because of technology or cost factors – it will be because we won’t know how to test these systems adequately.

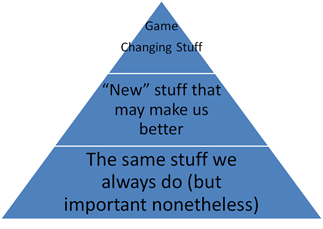

One way to view the current body of knowledge in software testing is as something like the triangle pictured here. At the bottom of the triangle is the “101” level of software testing. This is important stuff. It’s the basis of the skills, approaches, and techniques that are the core of software testing. My triangle is disproportionate, as this is probably where 99% of software testers spend their time (ok – to be fair, some never even make it on to the triangle). The big problem here, as I said above, is that this level supports the testing we do today, but does little to nothing to advance the future.

In the middle section of the triangle, new ideas and approaches do occur semi-frequently, but more often than not we view them as game-changers when their impact on the future is relatively minimal. Like the bottom section of the triangle, we need thinking in this area, but it’s not going to take us where we need to go. The top section of the triangle contains the true game changers. These are the new thoughts (or more likely, thoughts and ideas from some other area applied to testing) that will bring us into a new era.

Let me return to my worry (and a problem I mentioned above). My fear is this: The software testing we are doing today will be inadequate for the software of tomorrow. It doesn’t matter how good we are at the basic skills, it won’t be enough. What I fear is going to happen is that we will go ahead and create the software of tomorrow but we’ll try to test it like we test software today. For example’s sake, envision the systems of “Minority Report” – most of the pieces of technology in this movie have been demonstrated, but never with the flawless interconnection that Hollywood showed us. Do we have the skills and tools (and people) to test such a system today? Not. Even. Close.

What I’m afraid will happen is that we’ll eventually build a similar system, and we will try to test it with the same tools, approaches, and processes (and many of the same people) that test software today.

And there will be massive software failure, and very bad things will happen. At least then we may find enough motivation to advance the state of the art in testing.

But we don’t have to wait. If you’ve taken a reasonably close look at the software of today you know that we could use some innovation and advancement now. The right thing to do is to start moving beyond the basics and figure out how we can do better testing today. How do we test massively complex systems? How do we do so efficiently? Are the software testers of today the right people to test the software of the future?

Moving up the triangle is a hard move to make. Testing consultants make their money in the bottom of the triangle, so why would they have any motivation to make a move outside of the cash flow? But it’s not the consultants’ fault either. The testing profession has a high enough turnover that there are a huge number of new testers every year looking to get their foothold in the bottom rung of that triangle. Also consider that most of the testers today aren’t testing huge systems used by millions of users – they’re testing one-off IT apps used by hundreds (or dozens) of people who don’t care if the software has a few minor issues. There’s no need (or time) for most of these testers to ever get out of the bottom rung of the triangle.

It’s a vicious circle, but we have to find a way out. The future depends on it.

I wish I could say that my next blog post will have all the answers. (It won’t.) Instead, I’m going to continue to study, learn, and experiment. Somewhere out there is the knowledge that we’ll need to survive the software of the future, and I want to be part of making that future software successful. I hope that some of you can help make it happen as well.

It’s OK and can even be good to worry, but not too much; the ways in which we need to test the future products probably have to be invented as that software is created.

It would be interesting to know which types of bugs you think we will miss.

Is it failures when software or features interoperate, reliability problems, security issues, compatibility concerns, missing features, incorrect calculations, error-causing un-usability, scalability failures on world-wide launch, or all of these and other things as well?

I think the solutions will be somewhere in the semi-automation/testability area.

Human skill combined with technology/development advancement.

I also think the game-changing ideas won’t come from inside software testing alone; they will emerge through collaboration and/or from people with knowledge from diverse areas.

It requires a lot of hard work, and a lot of creativity, but I have hope.

Thanks for the comment – you’re right that we may not need to worry much, but my tester pessimism often gets the best of me.

Some examples would definitely be good – I’ll dedicate one of my next posts to some discussion.