After releasing The A Word, I didn’t plan on writing any more posts about automation. But after pondering transitions in test, and after reading this post from Noah Sussman, I have a thought in my head that I need to share.

I don’t think testers should write automation.*

I suppose I’d better explain myself.

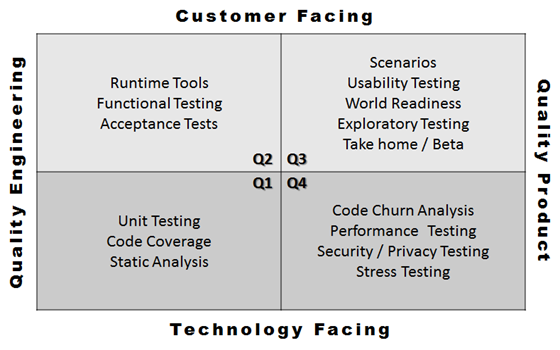

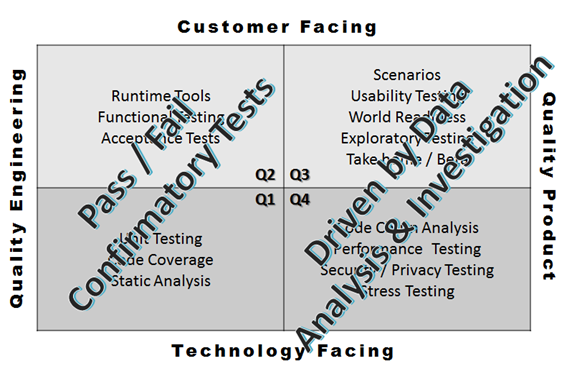

Not all automation is created equal. Automation works wonderfully for short confirmatory or validation tests. Unit, functional, acceptance, and integration tests (and all other “short” tests) lend themselves very well to automation. But I think it’s wasteful and inefficient to have testers write this automation – it should be written by the code owners. Testing your own code (IMO) improves design, prevents regression, and takes much, much less time than passing code off to another team to test.

That leaves the test team to write automation for end-to-end scenarios. There’s nothing wrong with that…except that writing end-to-end automated tests is hard (especially, as Noah points out, at the GUI level). The goal of automation (as touted by many vendors) is to enable running a bunch of tests automatically, so that testers will have more time for hands-on testing. In reality, I think that most test teams spend half of their time writing automation, half of their time maintaining and debugging automation, and half of their time doing everything else.

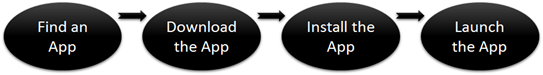

Let’s look at a typical scenario.

Pretty easy – you only need to automate three actions, add a bit of validation and you’re done!

If you’ve attempted something like this before, you know that’s not the whole story. A good automated test doesn’t just execute a set of user actions and then go on its way – you need to look at error conditions, and try to write the test in a way that prevents it from breaking.
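To make that concrete, here is a minimal sketch of what even a non-GUI version of the scenario test tends to look like once error handling creeps in. It assumes the scenario is search, download, install, then launch (inferred from the log examples later in this post); `FakeStore`, `retry`, and `run_install_scenario` are hypothetical names, and `FakeStore` stands in for the real product. A real GUI test would also need waits, element locators, and cleanup, so this understates the problem.

```python
import time


class FakeStore:
    """Hypothetical stand-in for the product; a real test would drive the UI."""

    def __init__(self, fail_download_times: int = 0) -> None:
        self.fail_download_times = fail_download_times  # simulated flaky network
        self.installed: set[str] = set()

    def search(self, name: str) -> list[str]:
        return [name] if name == "FizzBuzz" else []

    def download(self, name: str) -> bytes:
        if self.fail_download_times > 0:
            self.fail_download_times -= 1
            raise ConnectionError("Connection Lost")
        return b"package"

    def install(self, name: str, package: bytes) -> None:
        self.installed.add(name)

    def launch(self, name: str) -> bool:
        return name in self.installed


def retry(action, attempts: int = 3, delay: float = 0.0):
    """Retry a flaky step; each real-world failure mode tends to add another helper like this."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of retries: let the test fail
            time.sleep(delay)


def run_install_scenario(store: FakeStore, app: str = "FizzBuzz") -> None:
    # Step 1: search, and check the app was actually found.
    results = store.search(app)
    assert app in results, f"{app} not found in search results"
    # Step 2: download, tolerating transient network errors.
    package = retry(lambda: store.download(app))
    assert package, "download returned an empty package"
    # Step 3: install, then validate the end state instead of trusting the call.
    store.install(app, package)
    assert store.launch(app), f"{app} did not launch after install"


if __name__ == "__main__":
    run_install_scenario(FakeStore(fail_download_times=1))
    print("scenario passed")
```

Three user actions have already turned into a fake product, a retry policy, and several validation points, and every new failure mode in the real product means another branch here.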

It’s hard. It’s fragile. It’s a pain in the ass. So I say, stop doing it.

“But Alan – we have to test the scenario so that we know it works for our customers”. I believe that you want to know that the scenario works – but as much as you try to be the customer, nobody is a better representative of the customer than the customer. And – validating scenarios is quite a bit easier if we let the customers do it.

NOTE: I acknowledge that for some contexts, letting the customers generate usage data for an untested scenario is an inappropriate choice. But even when I’ve already tested the scenario, I still want (need!) to know what the customer experience is. As a tester, I can be the voice of the customer, but I am NOT the customer.

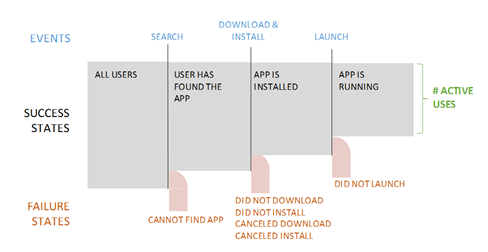

Now – let’s look at the same scenario:

But this time, instead of writing an automated test, I’ve added code (sometimes called instrumentation) to the product code that lets me know when I’ve taken an action related to the scenario. While we’re at it, let’s add more instrumentation at every error path in the code.
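Here is a minimal sketch of that kind of instrumentation using Python’s standard `logging` module. The function `acquire_app`, the `StoreError` type, and the logger name are hypothetical; the step callables stand in for real product code, and the message text mirrors the sample logs in this post. In a real product, each log call would more likely live inside the corresponding step’s implementation rather than in one wrapper.

```python
import logging

# Hypothetical logger name; a product would use whatever telemetry pipeline it already has.
log = logging.getLogger("store.scenario")


class StoreError(Exception):
    """Hypothetical product error carrying a numeric code (e.g. 115 or 119)."""

    def __init__(self, code: int, message: str) -> None:
        super().__init__(message)
        self.code = code


def acquire_app(app, search, download, install, launch) -> bool:
    """Run the search/download/install/launch flow, logging each step and any error.

    The step callables are hypothetical stand-ins for real product code.
    """
    steps = [("Search", search), ("Download", download), ("Install", install)]
    try:
        for label, step in steps:
            log.info("%s started for %s", label, app)  # one event per scenario action
            step(app)
        launch(app)
        log.info("%s launched successfully", app)
        return True
    except StoreError as err:
        # Instrument the error path too, so failures land in the same stream.
        log.error("ERROR %d: %s", err.code, err)
        return False


if __name__ == "__main__":
    # Timestamp format only approximates the sample logs (month, day, year, hour, minute, second).
    logging.basicConfig(level=logging.INFO, format="%(asctime)s: %(message)s",
                        datefmt="%m%d%Y-%H%M-%S")

    def ok(app):
        return None

    def lost(app):
        raise StoreError(115, "Connection Lost")

    acquire_app("FizzBuzz", ok, ok, ok, ok)    # logs all four milestones
    acquire_app("FizzBuzz", ok, lost, ok, ok)  # logs search, download, then ERROR 115
```

Compared with the scenario test, there is no fake product, no retry policy, and no UI to drive: the product reports what happened, and the error paths get covered by whatever real users run into.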

Now, when a user attempts the scenario, you could get logs like this:

    05072014-1601-3143: Search started for FizzBuzz
    05072014-1601-3655: Download started for FizzBuzz
    05072014-1602-2765: Install started for FizzBuzz
    05072014-1603-1103: FizzBuzz launched successfully

or

    05072014-1723-2143: Search started for FizzBuzz
    05072014-1723-3655: Download started for FizzBuzz
    05072014-1723-2945: ERROR 115: Connection Lost

or

    05072014-1819-3563: Search started for FizzBuzz
    05072014-1819-3635: ERROR 119: Store not available.

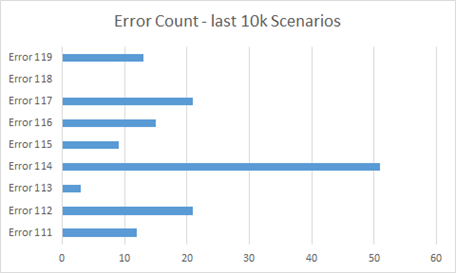

From this, you can begin to evaluate scenario success by looking at how many people get through the entire scenario, and how many fail for particular errors.

You can also generate data like this:

And now we have information about our product that’s directly related to real customer usage, and the code to enable it is substantially simpler and easier to maintain than traditional scenario automation.
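As a rough illustration of the evaluation described above (how many people get through the entire scenario, and how many fail on particular errors), here is a sketch that tallies a small funnel from logs shaped like the samples in this post. It assumes the log lines can be grouped per user attempt; the sample logs carry no session or user identifier, so that grouping is an assumption, and `STEPS` and `summarize` are hypothetical names.

```python
from collections import Counter

# Scenario milestones, in order, matched against the message text of the sample logs.
STEPS = ["Search started", "Download started", "Install started", "launched successfully"]


def summarize(sessions: list[list[str]]) -> tuple[Counter, Counter]:
    """Count how many sessions reached each milestone and how many hit each error.

    Assumes each inner list holds the log lines for one user's attempt.
    """
    reached: Counter = Counter()
    errors: Counter = Counter()
    for session in sessions:
        # Drop the "timestamp: " prefix and keep the message.
        messages = [line.partition(": ")[2] for line in session]
        for step in STEPS:
            if any(step in message for message in messages):
                reached[step] += 1
        for message in messages:
            if message.startswith("ERROR"):
                errors[message.split(":", 1)[0]] += 1  # key like "ERROR 115"
    return reached, errors


# The three sample logs from this post, one list per attempt.
sessions = [
    ["05072014-1601-3143: Search started for FizzBuzz",
     "05072014-1601-3655: Download started for FizzBuzz",
     "05072014-1602-2765: Install started for FizzBuzz",
     "05072014-1603-1103: FizzBuzz launched successfully"],
    ["05072014-1723-2143: Search started for FizzBuzz",
     "05072014-1723-3655: Download started for FizzBuzz",
     "05072014-1723-2945: ERROR 115: Connection Lost"],
    ["05072014-1819-3563: Search started for FizzBuzz",
     "05072014-1819-3635: ERROR 119: Store not available."],
]

reached, errors = summarize(sessions)
for step in STEPS:
    print(f"{step}: {reached[step]}/{len(sessions)}")
print(dict(errors))
```

With the three sample logs, this reports 3/3 attempts starting a search, 2/3 starting a download, 1/3 installing and launching successfully, and one occurrence each of ERROR 115 and ERROR 119. With real usage volumes, the same counts show where in the scenario customers drop off and which errors cost the most completions.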

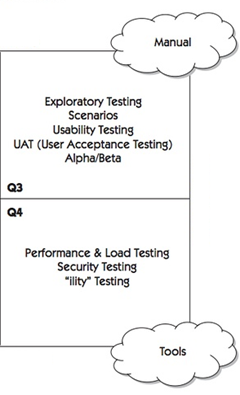

*A more precise way to put “I don’t think testers should write automation” is this: I think that many testers, in some contexts, don’t need to write automation anymore. Developers should write more automation, and testers should try to learn more from the product in use. Use this approach in financial applications at your own risk!
